Key Idea

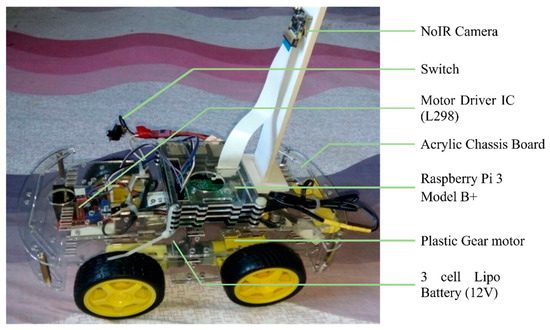

In this article, the researchers assembled a small-vehicle prototype to serve as a development platform for their control-system architecture. Their objective was to develop a closed-loop, autonomous, small-scale vehicle equipped with only one sensor: a camera. The camera produces image data that is fed into a deep learning model, which outputs a control signal that is sent back to the vehicle to set its direction of travel.

Top Technical Innovations


- The ability to use deep learning models to process image data and produce control signals to control a physical system in real-time.

- The ability to achieve feedback control with limited sensor input; the deep-learning model predicts the vehicle's steering angle based on only one data point, an image.

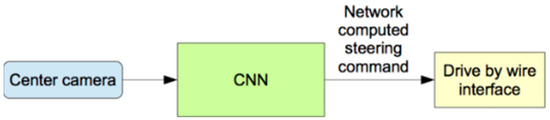

The researchers have done excellent work in developing a closed-loop feedback control system that integrates deep-learning models into the control architecture. The simplicity of the autonomous vehicle’s control method is shown in the figure above.

The control process begins with a single camera producing image data that is fed into a convolutional neural network (CNN). The CNN classifies the image to decide whether the vehicle’s next travel command will be left, right, forward, or reverse. The real-time control operation proceeds as follows:

- The camera takes a digital image.

- The image is passed through the Raspberry Pi, which at this stage acts strictly as a conduit for the image.

- The image moves from the Raspberry Pi to an onboard computer (which has more processing power than the Raspberry Pi) via Transmission Control Protocol (TCP). The onboard host computer acts as the TCP server and the Raspberry Pi as the client.

- The image is pre-processed (i.e., image augmentation) and passed through the CNN on the host computer. This step is not performed on the Raspberry Pi because of RAM constraints and the high latency that would result from running a computationally expensive model, such as a pre-trained deep learning model, on the less powerful device.

- The CNN classifies the image as left, right, forward, or reverse. This instruction, referred to as the “steering angle,” indicates the vehicle's next direction of maneuver. The classes were defined during the training phase of the neural network.

- The steering-angle control signal is then sent to the Raspberry Pi over the same TCP link.

- With the steering instruction received, the Raspberry Pi sends a command to the motor driver board, which in turn supplies the necessary electric current to the wheel motors to maneuver the vehicle accordingly.
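The round trip described in the steps above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' implementation: the article does not describe the message format, so the 4-byte length prefix, the function names, and the loopback address are all assumptions, and `classify` is a hypothetical stand-in for the CNN.

```python
import socket
import struct
import threading

COMMANDS = ("left", "right", "forward", "reverse")  # the four classes named in the article


def recv_exact(sock, n):
    """Read exactly n bytes; TCP delivers a byte stream, not whole messages."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf.extend(chunk)
    return bytes(buf)


def send_frame(sock, payload):
    """Send one message: 4-byte big-endian length, then the payload (assumed framing)."""
    sock.sendall(struct.pack(">I", len(payload)) + payload)


def recv_frame(sock):
    """Receive one length-prefixed message."""
    (size,) = struct.unpack(">I", recv_exact(sock, 4))
    return recv_exact(sock, size)


def classify(image_bytes):
    """Hypothetical stand-in for the CNN: derives a class from the byte sum."""
    return COMMANDS[sum(image_bytes) % len(COMMANDS)]


def host_serve_once(server_sock):
    """Host side (TCP server): receive one image, reply with the command."""
    conn, _addr = server_sock.accept()
    with conn:
        image = recv_frame(conn)
        send_frame(conn, classify(image).encode("ascii"))


def pi_request_command(address, image_bytes):
    """Raspberry Pi side (TCP client): forward one image, wait for the command."""
    with socket.create_connection(address) as sock:
        send_frame(sock, image_bytes)
        return recv_frame(sock).decode("ascii")


def demo():
    """Run one image-to-command round trip over the loopback interface."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.settimeout(5.0)          # avoid blocking forever if no client connects
    server.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
    server.listen(1)
    worker = threading.Thread(target=host_serve_once, args=(server,))
    worker.start()
    try:
        fake_image = bytes([1, 2, 3])  # placeholder bytes instead of a camera frame
        return pi_request_command(server.getsockname(), fake_image)
    finally:
        worker.join()
        server.close()
```

Calling `demo()` runs both roles on one machine. On the real vehicle the client would run on the Raspberry Pi, and the returned string would be translated into motor driver commands.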

Conclusions

The researchers have demonstrated that a physical system can be controlled through the combination of a single camera sensor, deep learning algorithms, simulator-generated images for training and testing, and prosumer electronics. The system’s accuracy, that is, the algorithm’s ability to predict the correct maneuver from image data, was reported to be 89.2%. This is a high accuracy score; however, in my opinion there is still room for improvement, as this value can be interpreted as a 10.8% chance that the vehicle will make an incorrect maneuver, potentially leading to a collision.
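To see how a 10.8% per-decision error rate adds up over a trip, the short calculation below may help. It rests on a simplifying assumption that is not from the article: every classification is treated as independent, with the same 89.2% chance of being correct. Consecutive camera frames are usually correlated, so this is a rough illustration only, and the function names are hypothetical.

```python
import math

REPORTED_ACCURACY = 0.892  # accuracy reported in the article


def prob_at_least_one_error(n_decisions, accuracy=REPORTED_ACCURACY):
    """Chance of one or more wrong commands in n independent decisions."""
    return 1.0 - accuracy ** n_decisions


def decisions_until_risk(target_risk, accuracy=REPORTED_ACCURACY):
    """Smallest number of decisions at which the error risk reaches target_risk."""
    return math.ceil(math.log(1.0 - target_risk) / math.log(accuracy))


if __name__ == "__main__":
    for n in (1, 5, 10, 50):
        print(f"{n:>3} decisions: {prob_at_least_one_error(n):.1%} chance of an error")
    print("Decisions until a 50% error risk:", decisions_until_risk(0.5))
```

Under the independence assumption, the chance of at least one wrong command passes 50% after only seven decisions, which is why per-decision accuracy needs to be much higher for sustained driving.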

Delicar’s latency, that is, the time from image capture to a system response (a maneuver: turn left, turn right, drive forward, or reverse), was measured at 0.12 seconds (120 ms), or about one-eighth of a second. To give some perspective, according to Wikipedia, in geostationary satellite transmission it takes about a quarter of a second for data to travel from one Earth-based ground station to the satellite and back down to a second ground station. This suggests that Delicar’s data transmission can be improved significantly. It is worth noting that many large organizations whose sole focus is autonomous systems have dedicated teams tackling the engineering challenge of minimizing latency. Low latency is highly sought after in autonomous systems, especially where humans (e.g., pedestrians or drivers) may be present and milliseconds can be the difference between avoiding a collision and not.
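The practical cost of a 120 ms latency can be estimated with simple arithmetic. In the sketch below, only the 0.12-second figure comes from the article. The vehicle speeds are hypothetical example values, and the decision-rate estimate assumes the pipeline handles one image at a time from capture to response.

```python
REPORTED_LATENCY_S = 0.12  # capture-to-response latency reported in the article


def blind_distance_m(speed_m_per_s, latency_s=REPORTED_LATENCY_S):
    """Distance the vehicle covers before a new command can take effect."""
    return speed_m_per_s * latency_s


def max_decisions_per_second(latency_s=REPORTED_LATENCY_S):
    """Upper bound on the control rate if images are processed one at a time."""
    return 1.0 / latency_s


if __name__ == "__main__":
    for speed in (0.5, 1.0, 2.0):  # hypothetical speeds in metres per second
        print(f"{speed} m/s -> {blind_distance_m(speed):.2f} m travelled per decision")
    print(f"At most {max_decisions_per_second():.1f} decisions per second")
```

At a hypothetical 1 m/s, the vehicle travels 12 cm between seeing an obstacle and reacting to it, and the distance grows linearly with speed.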

Article citation: Chy, M. K., Masum, A. K., & Sayeed, K. A. (2022). Delicar: A Smart Deep Learning Based Self Driving Product Delivery Car in Perspective of Bangladesh. Sensors. https://doi.org/10.3390/s22010126
